The industry news is always full of big events like mergers, bankruptcies, new regulations, or regulations killed. I’ve written many blogs about these kinds of issues, but I have rarely written about the unintended consequences of big industry changes. Today’s blog looks at two examples of unintended consequences.

The first is the decision by EchoStar to abandon the facility-based cellular business. There were several factors that led to the company’s decision to abandon the business line, but the company says the primary reason was pressure from the FCC to use the spectrum it owned or return it to the FCC for auction. The FCC was also pressuring the company to build faster and to get more customers.

One of the unintended consequences of the FCC nudging EchoStar out of the cellular business is that the company decided in 2025 that its best option for maximizing value was to sell the spectrum it planned to use for cell towers. The company sold spectrum to Starlink that will support Starlink’s entry into the satellite cellular business. EchoStar also sold spectrum to AT&T, which was put to immediate use to boost bandwidth at 23,000 cell sites nationwide. Both of these consequences are positive for the industry and will benefit many millions of customers.

Another consequence of EchoStar abandoning cell towers is that the company walked away from a huge number of long-term leases for space on cell towers. A group of ten tower company executives met with the FCC recently and asked for help to recover the abandoned payments from EchoStar. The company says it had no choice but to walk away from the leases since it is no longer using towers, and it says this fits the “force majeure” clause in its contracts with tower owners that excuses payments in the case of an unforeseeable event. It’s going to be interesting to see if the FCC does anything, or even if it has any authority to intervene in a business contractual dispute. This same thing happens all of the time on a smaller scale when carriers and ISPs walk away from leases they no longer need, and the only real difference in this case is the magnitude of the issue. My bet is that the FCC will do nothing since this is now a commercial contract dispute, and it will probably tell tower owners to take their claims to court.

Another big piece of news is Verizon’s purchase of Frontier Communications. The purchase process started sixteen months before the deal finally closed, and much has changed in the industry since then. When the transaction was first announced, CEO Hans Vestberg touted the sale as moving Verizon forward in pursuing convergence. That’s the new industry phrase that replaces the old triple-play strategy and now refers to bundling broadband and cellphones.

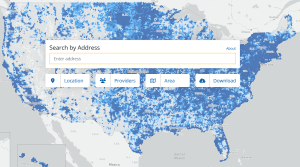

Companies generally pursue mergers in an attempt to boost stock prices, and it will be interesting to see if that happens for Verizon. There are already industry analysts panning the merger, saying that it doesn’t really move the needle for Verizon. Pre-transaction, Verizon’s fiber covers 9.2% of the country, and Frontier brings another 4.3% coverage. This pales against the cable companies that lead in the convergence battle, with the biggest cable companies collectively passing 90% of households in the country.

There are also other consequences when companies merge. Verizon will claim it’s gaining efficiencies from the merger, but the real consequence is that a lot of folks at Frontier will lose jobs that would have been safe without the merger. Many of the vendors and suppliers that supported Frontier will suddenly find they have lost a giant customer. It’s likely that eventually the prices of the products at the two companies will be brought into sync, and since Verizon’s fiber prices are higher than Frontier’s, it probably means eventual price increases for Frontier customers.