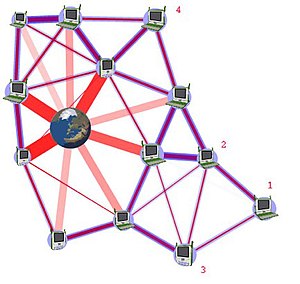

A question you hear from time to time is how vulnerable the US Internet backbone is to losing access if something happens to the major hubs. The architecture of the Internet has grown in response to the way carriers have decided to connect to each other, and there has never been any master plan for the best way to design the backbone infrastructure.

The Internet in this country is basically a series of hubs with spokes. There are a handful of large metro areas with major regional Internet hubs, such as Los Angeles, New York, Chicago, Dallas, Atlanta, and Northern Virginia. Then there are a few dozen smaller regional hubs, still in fairly large cities such as Minneapolis, Seattle, and San Francisco.

Back in 2002, scientists at Ohio State studied the structure of the Internet and concluded that disabling the major hubs would essentially have crippled it. At that time almost all Internet traffic in the country was routed through the major hubs, and knocking out just a few of them would have wiped out much of the Internet.

In 2007, scientists at MIT looked at the Internet's structure again and estimated that taking out the major hubs would wipe out about 70% of US Internet traffic, but that peering would allow about 33% of the traffic to keep working. Peering was still fairly new at the time.

Since then there has been a lot more peering, but one has to ask whether the Internet is any safer from a catastrophic outage than it was in 2007. One thing to consider is that much of today's peering happens at the major Internet hubs. In those locations the carriers hand traffic directly to each other rather than paying fees to send it through an ‘Internet Port’, which is nothing more than a point where some carrier determines the best routing of the traffic for you.

So peering at the major Internet hubs is a great way to save money, but it doesn’t really change the way Internet traffic is routed. My clients are smaller ISPs, and I can tell you how they decide to route their traffic. The smaller ones find a carrier that will transport it to one of the major Internet hubs. The larger ones can afford diversity, so they find carriers that can carry the traffic to two different major hubs. But by and large, every bit of traffic from my clients goes to and through the handful of major Internet hubs.

And this makes economic sense. The original hubs grew in importance because that is where the major carriers of the time, companies like MCI and Qwest, already had switching hubs. Because transport is expensive, every regional ISP sent its growing Internet traffic to the big hubs, since that was the cheapest solution.

If anything, there might be more traffic routed through the major hubs today than there was in 2007. Every large fiber backbone and transport provider has arranged its transport network to get traffic to these locations.

In each region of the country my clients are completely reliant on the Internet hubs. If a hub like the one in Dallas or Atlanta went down for some reason, ISPs that send traffic to that location would be completely isolated and cut off from the world.
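To make the single-homed versus dual-homed point concrete, here is a minimal sketch in Python. The topology is entirely hypothetical: three made-up ISPs attached to the Dallas and Atlanta hubs. It assumes an ISP is cut off only when every hub it connects to has failed, and it ignores any regional peering or backup paths that might exist in practice.

```python
# Hypothetical hub-and-spoke model: each ISP lists the major hubs its
# transport carriers reach. Names and topology are illustrative only.
ISP_UPLINKS = {
    "small_isp_a": {"Dallas"},               # single-homed
    "small_isp_b": {"Atlanta"},              # single-homed
    "larger_isp_c": {"Dallas", "Atlanta"},   # dual-homed for diversity
}


def isolated_isps(uplinks, failed_hubs):
    """Return (sorted) ISPs whose every uplink hub is in failed_hubs.

    Assumes an ISP has no path to the wider Internet except through
    the hubs it connects to directly (no regional peering modeled).
    """
    failed = set(failed_hubs)
    # hubs <= failed is a subset test: all of the ISP's hubs are down
    return sorted(isp for isp, hubs in uplinks.items() if hubs <= failed)


if __name__ == "__main__":
    print(isolated_isps(ISP_UPLINKS, ["Dallas"]))
    print(isolated_isps(ISP_UPLINKS, ["Dallas", "Atlanta"]))
```

Losing Dallas alone cuts off only the single-homed ISP that depends on it, while losing both hubs cuts off all three, which mirrors the multi-hub attack scenario.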

A recent report in the Washington Post said that the NSA had agents working at only a handful of major US Internet POPs (points of presence), because that gave them access to most of the Internet traffic in the US. That seems to reinforce the idea that the major Internet hubs in the country have grown in importance.

In theory the Internet is a disaggregated, decentralized system, and if traffic can’t go the normal way, it finds another path. But this idea only works if ISPs can get their traffic to the Internet in the first place. A disaster that takes out one of the major Internet hubs would leave many towns in the surrounding region without any Internet access. Terrorist attacks that take out more than one hub would cut off far more places.

Unfortunately there is no grand architect behind the Internet looking at these issues, because no one company has any claim to deciding how the Internet works. Instead, the carriers involved have all migrated to the handful of locations where it is most economical to interconnect with each other. I sure hope, at least, that somebody has figured out how to make those hub locations as safe as possible.